Game Theory Finds Who is at Fault in Self-Driving Car Accidents

While research on self-driving cars has understandably focused on ensuring safety and traffic efficiency, little has been done to explore how human drivers adapt their behavior to self-driving cars. Will humans become less cautious as self-driving cars become the norm? In an accident involving an autonomous vehicle (AV) and a human driver, who is liable? If both are liable, how should the accident loss be split between them?

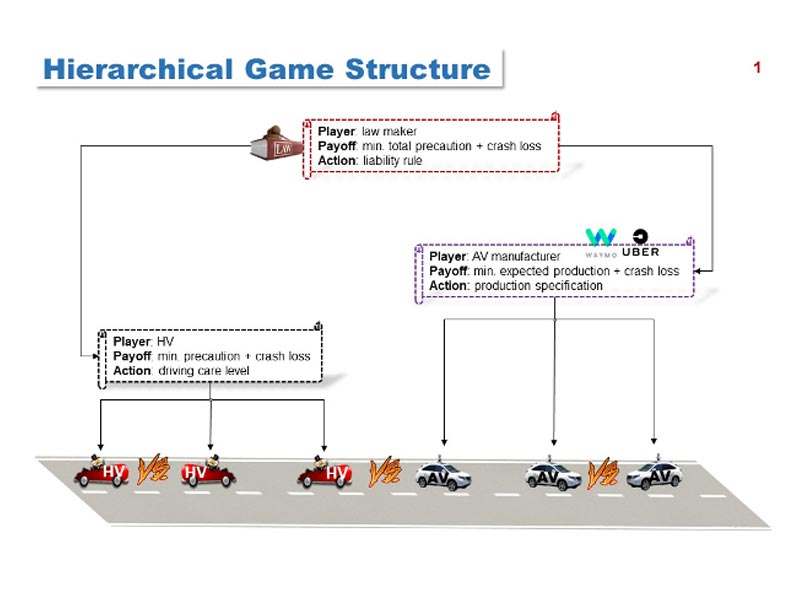

To tackle these questions, researchers at Columbia Engineering and Columbia Law School have developed a joint fault-based liability rule that can be used to regulate both self-driving car manufacturers and human drivers. They built a game-theoretic model that describes the interactions among the law maker, the self-driving car manufacturer, the self-driving car, and human drivers, and used it to examine how the liability rule should evolve as self-driving cars become more ubiquitous.

The researchers developed a sort of autonomous video game combining complex interactions between human drivers, between the AV manufacturer and human-driven vehicles, and between the law maker and other users. The game is then run with numerical examples to investigate the emergence of human drivers' “moral hazard,” the AV manufacturer's role in traffic safety, and the law maker's role in liability design.
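To make the idea of "moral hazard" concrete, here is a minimal toy sketch in Python. It is not the researchers' model: the accident-probability formula, the quadratic cost of care, and every constant and function name below are illustrative assumptions. It only shows how a human driver who minimizes expected cost may choose less care as the AV takes more.

```python
# Toy illustration only: every functional form and constant here is an
# assumption made for this sketch, not taken from the researchers' model.

BASE_RISK = 0.1      # assumed accident probability when nobody takes care
ACCIDENT_LOSS = 100  # assumed total loss from one accident
CARE_COST = 10       # assumed scale of the human's cost of being careful
HUMAN_SHARE = 0.5    # assumed share of the loss the liability rule puts on the human


def accident_probability(human_care, av_care):
    """Assumed form: risk falls as either party takes more care (0 to 1)."""
    return BASE_RISK * (1 - human_care) * (1 - av_care)


def human_expected_cost(human_care, av_care):
    """Cost of taking care plus the human's share of the expected loss."""
    effort = 0.5 * CARE_COST * human_care ** 2
    liability = HUMAN_SHARE * ACCIDENT_LOSS * accident_probability(human_care, av_care)
    return effort + liability


def best_response(av_care, steps=100):
    """Grid search for the care level that minimizes the human's expected cost."""
    grid = [i / steps for i in range(steps + 1)]
    return min(grid, key=lambda care: human_expected_cost(care, av_care))


if __name__ == "__main__":
    for av_care in (0.0, 0.4, 0.8):
        print(f"AV care {av_care:.1f} -> human care {best_response(av_care):.2f}")
```

Under these assumed numbers, the human's cost-minimizing care level falls as the AV's care rises. That is the kind of behavioral adaptation a liability rule would need to counteract, for example by changing the share of the loss assigned to the human.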

The need for this kind of strategic thinking was made evident in a recent decision by the National Transportation Safety Board (NTSB) on an Uber crash that killed a pedestrian in Arizona. The NTSB split the blame among Uber, the company’s AV, the safety driver in the vehicle, the victim, and the state of Arizona. The Engineering and Law researchers hope their analytical tools will inform AV policy-makers in their regulatory decisions and help mitigate uncertainty in the existing regulatory environment around AV technologies.
